
Description

Welcome to the class website for Machine Learning: Special Topics. This graduate seminar covers special topics in machine learning, developing the material from the perspective of the mathematical foundations and theory behind learning algorithms. The course also discusses practical computational aspects and applications. Please check back periodically, as updates and new information will be posted on the course website.

We plan to use the following books:

- The Elements of Statistical Learning: Data Mining, Inference, and Prediction, by Hastie, Tibshirani, Friedman.

- Foundations of Machine Learning, by Mehryar Mohri, Afshin Rostamizadeh, and Ameet Talwalkar.

The course will also be based on recent papers from the literature and special lecture materials prepared by the instructor. Topics may be adjusted based on the backgrounds and interests of the class.

Syllabus [PDF]

Topic Areas

- Foundations of Machine Learning / Data Science

- Historic developments and recent motivations.

- Concentration Inequalities and Sample Complexity Bounds.

- Statistical Learning Theory, PAC-Learnability, related theorems.

- Rademacher Complexity, Vapnik–Chervonenkis Dimension.

- No-Free-Lunch Theorems.

- High Dimensional Probability and Statistics.

- Optimization theory and practice.

- Supervised learning

- Linear methods for regression and classification.

- Model selection and bias-variance trade-offs.

- Support vector machines.

- Kernel methods.

- Parametric vs non-parametric regression.

- Neural network methods: deep learning.

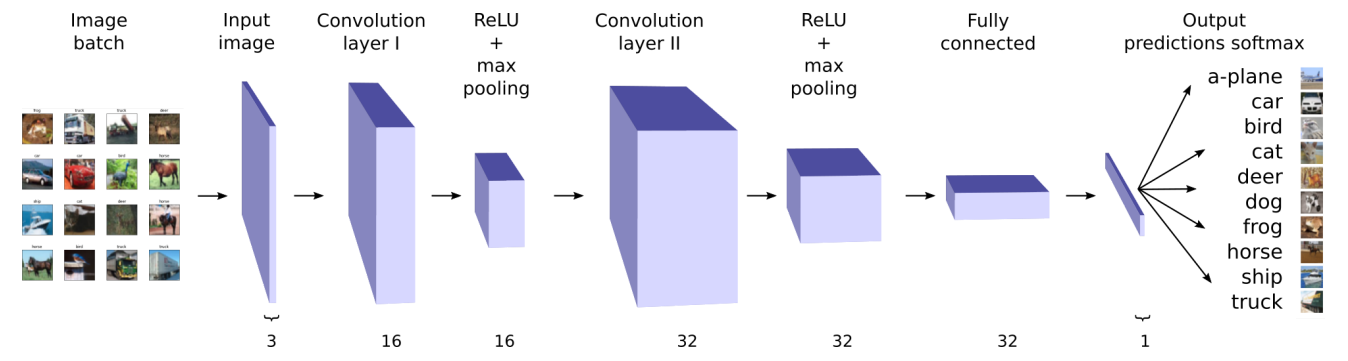

- Convolutional Neural Networks (CNNs).

- Recurrent Neural Networks (RNNs).

- Unsupervised learning

- Clustering methods

- Kernel principal component analysis, and related methods

- Manifold learning

- Neural network methods.

- Autoencoders (AEs)

- Generative Adversarial Networks (GANs)

- Additional topics

- Stochastic approximation and optimization.

- Variational inference.

- Generative Methods: GANs, AEs.

- Graphical models.

- Randomized numerical linear algebra approximations.

- Dimensionality reduction.
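As a small illustration of the concentration inequality and sample complexity topics listed above, the sketch below evaluates two standard bounds for a finite hypothesis class H, in the form found in standard treatments such as the Mohri, Rostamizadeh, and Talwalkar text: a consistent learner in the realizable case needs m ≥ (ln|H| + ln(1/δ))/ε samples, and in the agnostic case Hoeffding's inequality plus a union bound gives a uniform deviation of at most ε once m ≥ (ln|H| + ln(2/δ))/(2ε²). The function names are hypothetical, and the course slides may state these bounds with different notation or constants.

```python
import math


def realizable_sample_size(num_hypotheses: int, epsilon: float, delta: float) -> int:
    """Samples sufficient for a consistent learner over a finite class H to
    reach generalization error <= epsilon with probability >= 1 - delta."""
    _check(num_hypotheses, epsilon, delta)
    return math.ceil((math.log(num_hypotheses) + math.log(1 / delta)) / epsilon)


def agnostic_sample_size(num_hypotheses: int, epsilon: float, delta: float) -> int:
    """Samples sufficient (Hoeffding + union bound) so that
    |R(h) - R_hat(h)| <= epsilon for every h in H with probability >= 1 - delta."""
    _check(num_hypotheses, epsilon, delta)
    return math.ceil(
        (math.log(num_hypotheses) + math.log(2 / delta)) / (2 * epsilon ** 2)
    )


def uniform_deviation(num_hypotheses: int, m: int, delta: float) -> float:
    """Inverse view of the agnostic bound: the deviation guaranteed
    uniformly over H for a sample of size m, with probability >= 1 - delta."""
    return math.sqrt((math.log(num_hypotheses) + math.log(2 / delta)) / (2 * m))


def _check(num_hypotheses: int, epsilon: float, delta: float) -> None:
    # The bounds only make sense for a non-empty class and epsilon, delta in (0, 1).
    if num_hypotheses < 1 or not 0 < epsilon < 1 or not 0 < delta < 1:
        raise ValueError("need |H| >= 1 and epsilon, delta in (0, 1)")


if __name__ == "__main__":
    # |H| = 1000 hypotheses, accuracy epsilon = 0.05, confidence 1 - delta = 0.99.
    print(realizable_sample_size(1000, 0.05, 0.01))  # 231
    print(agnostic_sample_size(1000, 0.05, 0.01))    # 2442
```

Note the gap between the two cases: the realizable bound scales as 1/ε, while the agnostic bound scales as 1/ε², which is why the second sample size is roughly ten times larger here.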

Prerequisites:

Linear Algebra, Probability, and ideally some programming experience.

Slides

| | Topic | Materials |
|---|---|---|
| - | Statistical Learning Theory, Generalization Errors, and Sample Complexity Bounds | [Large Slides] [PDF] [MicrosoftSlides] |
| - | Complexity Measures, Rademacher, VC-Dimension | [Large Slides] [PDF] [MicrosoftSlides] |
| - | Support Vector Machines, Kernels, Optimization Theory Basics | [Large Slides] [PDF] [MicrosoftSlides] |
| - | Regression, Kernel Methods, Regularization, LASSO, Tomography Example | [Large Slides] [PDF] [MicrosoftSlides] |
| - | Unsupervised Learning, Dimension Reduction, Manifold Learning | [Large Slides] [PDF] [MicrosoftSlides] |
| - | Neural Networks and Deep Learning Basics | [PDF] [GoogleSlides] |
| - | Convolutional Neural Networks (CNNs) Basics | [PDF] [GoogleSlides] |
| - | Recurrent Neural Networks (RNNs) Basics | [Large Slides] [PDF] [MicrosoftSlides] |
| - | Generative Adversarial Networks (GANs) | [Large Slides] [PDF] [MicrosoftSlides] |

Supplemental Materials:

- Python Documentation.

- NumPy Tutorial

- Anaconda and Tutorial

- Image Classification using Convolutional Neural Networks

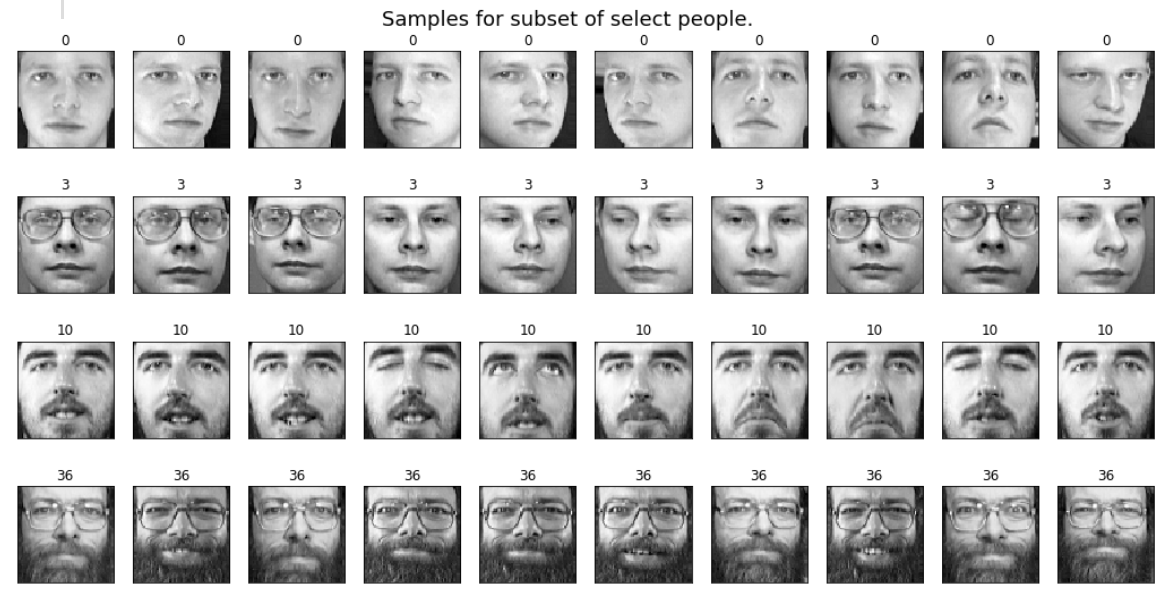

- Facial Recognition and Feature Extraction

Additional Materials

- You can find additional materials on my related data science course websites.

Homework Assignments:

Turn in all homework on Canvas by 5pm on the due date. Note: you may miss one problem set without penalty.

- Problem Set 1 (due 10/14): [PDF]

- Problem Set 2 (due 11/4): [PDF]

- Problem Set 3 (due 11/18): [PDF]

- Problem Set 4 (due 12/2): [PDF]
